Kimi K2 Just Dropped and It's Already Breaking the AI Market

Kimi K2 is the commoditization of AI happening in real time. When trillion-parameter agentic intelligence becomes available at commodity prices, the game changes overnight.

Beijing-based startup Moonshot AI just open-sourced Kimi K2, and the AI community is losing its mind. Within hours of release, it hit #1 trending on Hugging Face, and for good reason: this thing is a beast. Kimi K2 is a 1 trillion parameter mixture-of-experts model with 32 billion activated parameters that's specifically designed for "agentic intelligence." Translation: it doesn't just answer questions, it actually does stuff.
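To make the mixture-of-experts numbers concrete, here is a quick back-of-the-envelope sketch using only the two figures quoted above. The function name is just for illustration.

```python
# Figures quoted in the article: 1 trillion total parameters,
# 32 billion activated for any given token.
TOTAL_PARAMS = 1_000_000_000_000
ACTIVE_PARAMS = 32_000_000_000


def active_fraction(total: int, active: int) -> float:
    """Share of the model's parameters that take part in processing one token."""
    return active / total


fraction = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
print(f"Active per token: {fraction:.1%}")
```

In other words, only a small slice of the network does work for each token, which is how a model this large can stay practical to serve.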

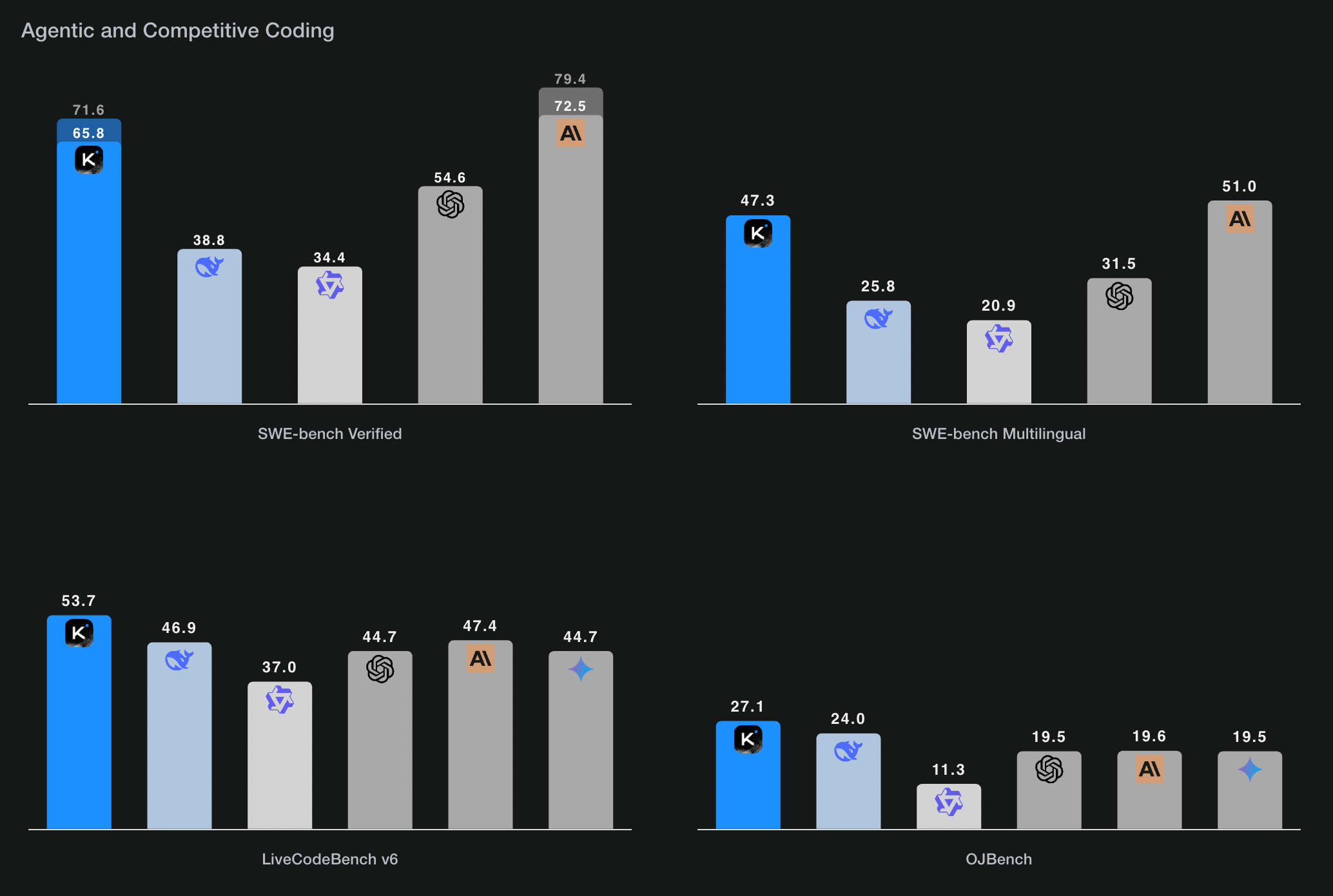

The benchmarks tell the story. Kimi K2 goes toe-to-toe with Claude Sonnet 4, GPT-4.1, and other frontier models on coding tasks, tool use benchmarks, and mathematical reasoning. It doesn't win everywhere: on SWE-bench Verified it scores 65.8%, behind Claude's 72.7%. But here's the kicker: it's completely open source. No API limits, no usage restrictions, just pure computational power that you can run yourself.

What makes Kimi K2 special isn't just the raw performance. This model was built from the ground up for agentic tasks. It can analyze salary data through 16 IPython calls, generate interactive websites, build 3D Minecraft clones in JavaScript, and orchestrate complex multi-tool workflows. The examples Moonshot shared are genuinely impressive: automatically planning Coldplay tours through 17 tool calls spanning search, calendar, Gmail, flights, and restaurant bookings.
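For readers new to the term, a multi-tool workflow like the ones described above boils down to a loop: the model picks a tool, the tool runs, and the result is fed back until the model declares it is done. The sketch below is a toy illustration of that loop, not Moonshot's implementation. The tools, the city, and the `scripted_model` stand-in are all hypothetical; a real setup would call an actual model and real APIs.

```python
from typing import Callable


# Hypothetical tools; a real agent would hit search, calendar, or booking APIs.
def search_concerts(artist: str) -> str:
    return f"{artist}: 2 upcoming shows found"


def book_restaurant(city: str) -> str:
    return f"Table reserved in {city}"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_concerts": search_concerts,
    "book_restaurant": book_restaurant,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the model: replays a fixed plan, one step per tool result seen."""
    plan = [
        {"tool": "search_concerts", "arg": "Coldplay"},
        {"tool": "book_restaurant", "arg": "London"},  # made-up destination
        {"final": "Trip planned."},
    ]
    steps_done = sum(1 for message in history if message["role"] == "tool")
    return plan[steps_done]


def run_agent(task: str, max_steps: int = 20) -> tuple[str, int]:
    """Ask the model, run the tool it requests, feed the result back, repeat."""
    history = [{"role": "user", "content": task}]
    for calls_made in range(max_steps):
        action = scripted_model(history)
        if "final" in action:
            return action["final"], calls_made
        result = TOOLS[action["tool"]](action["arg"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached.", max_steps


answer, tool_calls = run_agent("Plan a Coldplay trip")
print(answer, f"({tool_calls} tool calls)")
```

The step limit is there so a confused model cannot loop forever, which matters more as the number of chained calls grows.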

The technical innovation behind Kimi K2 is equally fascinating. Moonshot developed the "MuonClip" optimizer to solve the training instability it hit when scaling up the Muon optimizer it had used previously. The team also created massive agentic data synthesis pipelines and general reinforcement learning systems that work on both verifiable and non-verifiable tasks.

But the real excitement started when attention turned to Chutes, the Bittensor-powered inference platform. Casper Hansen, an open-source contributor, pointed out that "The only reason Kimi K2 is not on OpenRouter is that Chutes has not hosted it yet. Actually amazing lol." Jon Durbin from Chutes quickly responded that they were working on deployment: the deploy docs called for a minimum of 16 H200s, but the team managed to get it running on 8xH200 with a 65k context length.

The only reason Kimi K2 is not on OpenRouter is that Chutes has not hosted it yet. Actually amazing lol

— Casper Hansen (@casper_hansen_) July 11, 2025

It seems, according to deploy docs, the minimum viable configuration is 16 h200s. Unfortunately we don't support tying nodes together (yet), but b200/mi300x support is just around the corner. I think this is the perfect use case for our first deployments of those GPUs. https://t.co/do9tLwgqtQ

— Jon Durbin (@jon_durbin) July 11, 2025
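To put the GPU counts in these posts in perspective, here is a rough sketch of how much memory the weights alone would need. The 141 GB figure is the H200's published memory capacity. The bytes-per-parameter values are assumptions for illustration, not Moonshot's or Chutes' actual configuration, and the estimate ignores KV cache, activations, and runtime overhead.

```python
import math

TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion, as quoted in the article
H200_MEMORY_GB = 141              # memory capacity of one NVIDIA H200


def weights_gb(params: int, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: int, bytes_per_param: float,
                         gpu_gb: float = H200_MEMORY_GB) -> int:
    """Smallest GPU count whose combined memory fits the weights (nothing else)."""
    return math.ceil(weights_gb(params, bytes_per_param) / gpu_gb)


# Assumed storage widths: 2 bytes (16-bit) and 1 byte (8-bit) per parameter.
for label, width in [("16-bit", 2.0), ("8-bit", 1.0)]:
    size = weights_gb(TOTAL_PARAMS, width)
    gpus = min_gpus_for_weights(TOTAL_PARAMS, width)
    print(f"{label}: ~{size:,.0f} GB of weights, at least {gpus} H200s")
```

Under the one-byte assumption, the weights come to roughly 1,000 GB against 1,128 GB on eight H200s, which leaves little room for anything else. That is at least consistent with running at a reduced 65k context, though the real deployment details may differ.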

The market implications hit immediately. Florian S noted that Kimi K2 on Chutes is "way faster and way cheaper than all other providers listed on OpenRouter. Once again shows the superior performance of Chutes and Bittensor as a platform." When a model this powerful becomes available at commodity prices through decentralized infrastructure, it changes everything.

Jacob Steeves, co-founder of The Opentensor Foundation (the foundation behind Bittensor), captured the moment perfectly: "Everything is trending to commodification. We're just there already." The memes started flowing immediately, because this is X, where tech announcements get turned into comedy gold faster than you can say "gpt-4-killer." People were already joking about the timing, especially with OpenAI having repeatedly teased open-weight model releases throughout 2025 only to delay them multiple times for "safety testing."

Sam Altman and his team at OpenAI were ready to launch open weights model, but…. pic.twitter.com/JiEIc5SSpx

— Prashant (@Prashant_1722) July 12, 2025

What this means for developers and businesses is profound. Kimi K2 offers frontier-level agentic capabilities without the vendor lock-in, usage limits, or astronomical costs of proprietary models. You can fine-tune it, modify it, and deploy it however you want. The model supports both base and instruct variants, comes with comprehensive tool-calling capabilities, and includes detailed deployment guides for vLLM, SGLang, KTransformers, and TensorRT-LLM.

The timing couldn't be better. As AI moves from chatbots to autonomous agents, having access to genuinely capable open-source models becomes critical. Kimi K2 isn't just matching proprietary models; in many cases, it's beating them. And it's doing so while being completely transparent about its training, optimization techniques, and limitations.

This is what the future of AI looks like: powerful, open, and accessible. When a 1 trillion parameter agentic model becomes a commodity overnight, the entire industry has to recalibrate. The question isn't whether open source will eventually match proprietary AI; it's whether proprietary AI can justify its premium when models like Kimi K2 exist.