One Does Not Simply... Wait, Templar Just Did: 70B AI Storms Big Tech's Mordor

The banners are raised, as Templar put it. The campaign has begun. And Big Tech's monopoly on frontier AI development just became a lot less certain.

Templar (Subnet 3 on Bittensor) launched the world's first fully decentralized, permissionless training run of a 70 billion parameter AI model. This is no mere milestone. It is the first blow struck against the throne of centralized power, a challenge to the very lords who have claimed dominion over the realm of artificial intelligence.

While Meta, OpenAI, and Google spend billions on data centers and exclusive compute clusters, Templar is setting out to show that distributed miners across the internet can coordinate to train models at the same scale.

The banners are raised, the gates of the citadel swing wide. With v2.1.0, Templar rides out on its greatest campaign yet — the world’s first fully decentralized, permissionless 70B parameter training run.

What was once only tested in skirmishes now becomes a battle march into…

templar (@tplr_ai), September 12, 2025

Training a 70 billion parameter model is typically exclusive to companies with vast resources and massive infrastructure investments. Until today, no decentralized network had attempted anything close to this scale. Templar's previous tests maxed out at smaller models of 1.2B and 8B parameters, which served as proof-of-concept runs.

The jump from 8B to 70B is nearly a ninefold increase in parameter count, and the complexity grows with it. The training corpus spans approximately 1.5 trillion tokens, divided into 14 shards of roughly 107 billion tokens each. Every shard requires 400GB of storage. This is enterprise-scale AI development happening across a permissionless network of independent miners.
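The corpus figures above can be sanity-checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope check, not anything from Templar's codebase. It assumes "400GB" means decimal gigabytes, and the function and variable names are made up for illustration. The article does not say whether shards hold raw text or token IDs, so the bytes-per-token figure is just the ratio of the two quoted numbers.

```python
# Back-of-the-envelope check of the corpus figures quoted in the article.
NUM_SHARDS = 14
TOKENS_PER_SHARD = 107e9   # "roughly 107 billion tokens each"
BYTES_PER_SHARD = 400e9    # "400GB", assumed here to be decimal gigabytes


def corpus_summary(num_shards, tokens_per_shard, bytes_per_shard):
    """Return total tokens, total storage in TB, and storage per token."""
    total_tokens = num_shards * tokens_per_shard
    total_bytes = num_shards * bytes_per_shard
    return {
        "total_tokens": total_tokens,
        "total_terabytes": total_bytes / 1e12,
        "bytes_per_token": bytes_per_shard / tokens_per_shard,
    }


summary = corpus_summary(NUM_SHARDS, TOKENS_PER_SHARD, BYTES_PER_SHARD)
print(f"total tokens:      {summary['total_tokens'] / 1e12:.2f} trillion")
print(f"total storage:     {summary['total_terabytes']:.1f} TB")
print(f"storage per token: {summary['bytes_per_token']:.2f} bytes")
```

Fourteen shards of 107 billion tokens come to just under 1.5 trillion tokens, which matches the headline figure, and the full corpus needs about 5.6 TB of storage under these assumptions.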

Coordinating distributed training at this scale requires solving problems that traditional AI labs rarely face: heterogeneous compute resources, variable network connections, and economic incentives for honest participation.

Templar's breakthrough builds on several key innovations. Traditional distributed training requires constant synchronization between nodes, which creates bandwidth bottlenecks. Their SparseLoco optimizer dramatically reduces that communication overhead while maintaining training effectiveness.

Their incentive mechanism ensures miners (independent developers or teams) are rewarded for contributions that actually improve model performance. Validators measure the loss improvement from each miner's gradients, calculate scores based on those performance gains, and update OpenSkill ratings using the Plackett-Luce model. These ratings determine the weights set on the blockchain, creating economic incentives aligned with training objectives. Miners get paid for making the model better, not just for participating.
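To make the scoring idea concrete, here is a minimal sketch of "reward miners by loss improvement". It is not Templar's validator code. The function names, miner IDs, and loss values are hypothetical, and the OpenSkill rating update is deliberately replaced with a plain normalization so the example stays self-contained.

```python
def score_miners(baseline_loss, loss_after_update):
    """Score each miner by how much its gradient lowered the validator's loss."""
    return {miner: baseline_loss - loss for miner, loss in loss_after_update.items()}


def rank_miners(scores):
    """Order miners from largest to smallest loss improvement."""
    return sorted(scores, key=scores.get, reverse=True)


def to_weights(scores):
    """Turn scores into weights that sum to 1.

    Simplified stand-in for the rating step: miners whose gradient made
    the loss worse are clipped to zero and receive no weight.
    """
    clipped = {miner: max(score, 0.0) for miner, score in scores.items()}
    total = sum(clipped.values())
    if total == 0:
        return {miner: 0.0 for miner in clipped}
    return {miner: score / total for miner, score in clipped.items()}


# Hypothetical numbers: validator loss before and after applying each gradient.
baseline = 4.0
after = {"miner_a": 3.5, "miner_b": 3.75, "miner_c": 4.25}

scores = score_miners(baseline, after)
ranking = rank_miners(scores)
weights = to_weights(scores)
print(ranking)
print(weights)
```

In the real system the ranking would feed a rating model rather than a one-shot normalization, which smooths out noisy individual evaluations over many rounds.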

Using Discrete Cosine Transform (DCT) and top-k selection, Templar compresses gradients by orders of magnitude without losing essential information. This allows effective coordination across internet connections that would be impossible with naive approaches. The system saves frequent checkpoints, meaning restarts don't lose progress.
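The compression idea can be illustrated with a toy example: transform a gradient with the DCT, keep only the k largest coefficients, and reconstruct from those. This is a sketch under stated assumptions, not Templar's implementation. The helper names are hypothetical, the DCT is a naive pure-Python version rather than a fast library routine, and the test signal is deliberately smooth, so it compresses far better than a real gradient would.

```python
import math


def _scale(k, n):
    """Normalization factor that makes the DCT-II orthonormal."""
    return math.sqrt((1.0 if k == 0 else 2.0) / n)


def dct(x):
    """Naive O(n^2) orthonormal DCT-II."""
    n = len(x)
    return [
        _scale(k, n) * sum(v * math.cos(math.pi * (i + 0.5) * k / n)
                           for i, v in enumerate(x))
        for k in range(n)
    ]


def idct(coeffs):
    """Inverse of dct() above (orthonormal DCT-III)."""
    n = len(coeffs)
    return [
        sum(_scale(k, n) * c * math.cos(math.pi * (i + 0.5) * k / n)
            for k, c in enumerate(coeffs))
        for i in range(n)
    ]


def compress(grad, k):
    """Keep only the k largest-magnitude DCT coefficients as (index, value) pairs."""
    coeffs = dct(grad)
    top = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)[:k]
    return len(grad), [(i, coeffs[i]) for i in sorted(top)]


def decompress(length, sparse):
    """Rebuild an approximate gradient from the sparse coefficients."""
    coeffs = [0.0] * length
    for i, c in sparse:
        coeffs[i] = c
    return idct(coeffs)


def relative_error(a, b):
    """L2 error of b relative to the norm of a."""
    num = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return num / math.sqrt(sum(x * x for x in a))


# Toy "gradient": a smooth signal of 64 values (an assumption for illustration).
grad = [math.sin(2 * math.pi * i / 64) + 0.1 * math.cos(2 * math.pi * 5 * i / 64)
        for i in range(64)]

length, sparse = compress(grad, k=8)       # send 8 coefficients instead of 64 values
restored = decompress(length, sparse)
print(f"kept {len(sparse)} of {length} coefficients, "
      f"relative error {relative_error(grad, restored):.4f}")
```

Only the kept indices and values need to cross the network, which is where the bandwidth saving comes from. How much accuracy survives depends on how concentrated the gradient's energy is in a few coefficients.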

The successful launch represents a fundamental shift in how frontier AI models can be developed. No longer do you need billions in capital and exclusive access to compute resources to train state-of-the-art models. Any organization or collective can coordinate distributed training through decentralized networks.

Centralized AI development is vulnerable to government interference, corporate decisions, and platform policies. Decentralized training creates models that no single entity can control or shut down. When training costs drop and barriers disappear, more experimentation becomes possible.

The contrast with traditional AI development is stark. OpenAI's GPT models are trained on centralized clusters with tightly coordinated hardware and very large budgets. Meta's Llama series is developed in internal data centers with homogeneous hardware configurations. Templar's 70B run is distributed across independent miners with heterogeneous hardware, variable network connections, and economic incentives replacing corporate coordination.

The fact that Templar can attempt comparable scale through decentralized coordination suggests that the centralized model isn't technically necessary; it's just how the industry developed historically.

If Templar's 70B training run succeeds, reaching completion with competitive performance metrics, it will prove that decentralized networks can match centralized AI development at frontier scale. This would represent a shift comparable to how Bitcoin demonstrated that digital money could work without central banks.

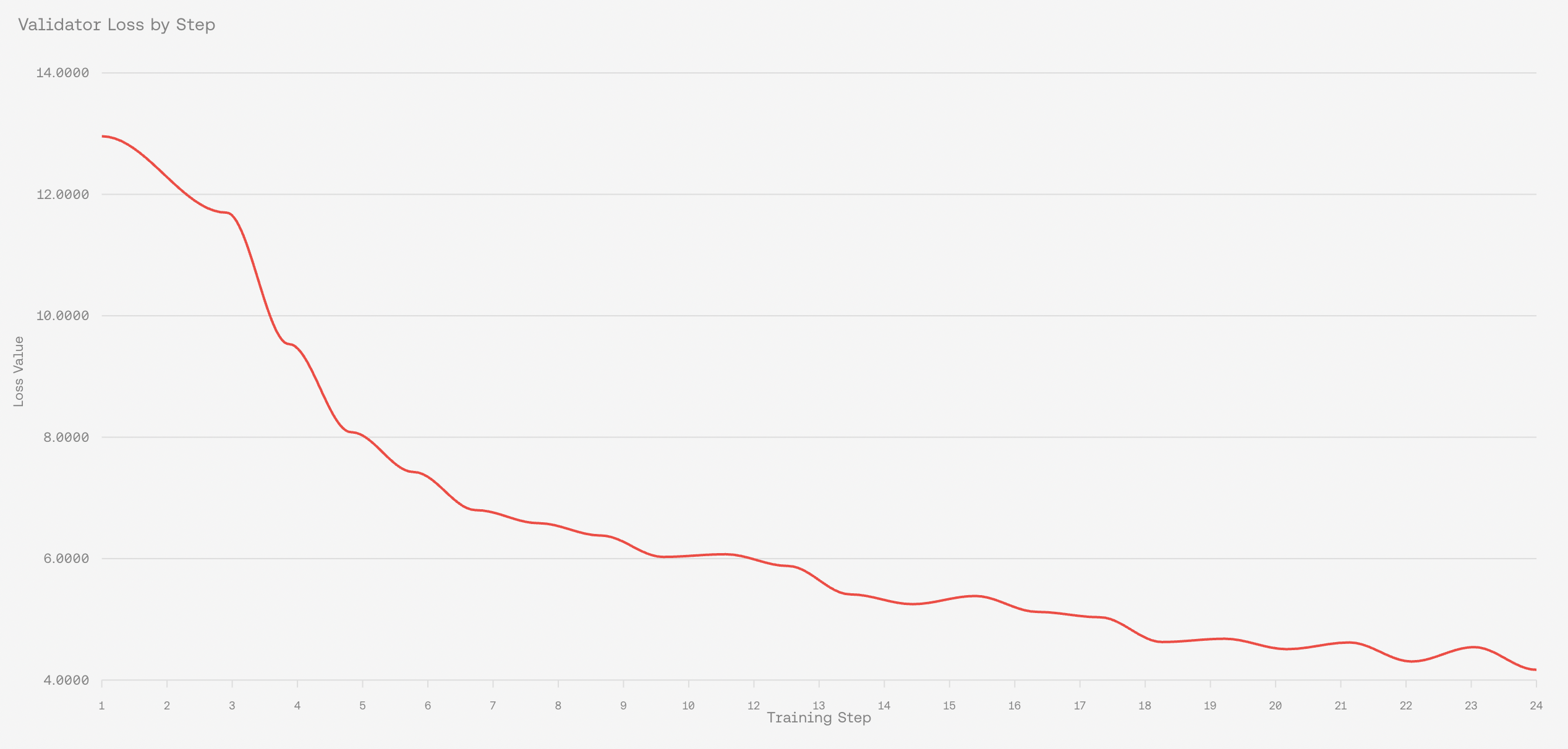

The training uses Bits per Byte (BpB) as the evaluation metric, which gives a consistent measurement across different tokenizer vocabularies. The validator loss chart shows early progress in practice: loss drops from 13 to 4.2 over 24 training steps. That smooth decline is an early sign that the OpenSkill-based incentive system is working: miners are rewarded for contributions that actually improve the model, so economic coordination stands in for corporate management.
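A short sketch shows why BpB is comparable across tokenizers: it divides the total information cost by the number of bytes in the text, not by the number of tokens. The example assumes the loss is an average cross-entropy in nats per token, a common convention. The article does not say how Templar computes it, and the function name and numbers here are hypothetical.

```python
import math


def bits_per_byte(mean_loss_nats_per_token, num_tokens, text):
    """Convert an average per-token loss (in nats) into bits per UTF-8 byte."""
    total_nats = mean_loss_nats_per_token * num_tokens
    total_bits = total_nats / math.log(2)      # nats -> bits
    num_bytes = len(text.encode("utf-8"))
    return total_bits / num_bytes


text = "The banners are raised. The campaign has begun."

# Two hypothetical tokenizers split the same text differently.
# Tokenizer A: fewer, larger tokens, so each token is harder to predict.
bpb_a = bits_per_byte(mean_loss_nats_per_token=2.0, num_tokens=10, text=text)
# Tokenizer B: twice as many tokens, each half as costly to predict.
bpb_b = bits_per_byte(mean_loss_nats_per_token=1.0, num_tokens=20, text=text)

print(f"tokenizer A: {bpb_a:.4f} bits per byte")
print(f"tokenizer B: {bpb_b:.4f} bits per byte")
```

The per-token losses differ by a factor of two, yet both runs land on the same BpB because the total cost over the same bytes is identical. Per-token loss alone would make tokenizer B look twice as good.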

Success would validate that decentralized coordination can handle frontier-scale AI development, economic incentives can replace corporate management for complex technical projects, and the barriers to advanced AI development are lower than previously assumed.

Successful decentralized training at 70B scale would challenge AI monopolization by proving that anyone can coordinate training of frontier models. Global participation becomes possible when developers worldwide can contribute to frontier AI development rather than depending on access granted by tech companies. Distributed development creates resilience against single points of failure, whether technical, economic, or political.

We're witnessing the emergence of an alternative model for AI development: one where coordination happens through economic incentives rather than corporate hierarchies, where participation is permissionless rather than exclusive, and where the resulting models belong to networks rather than companies.

Whether Templar's specific run succeeds or not, the precedent has been set. Decentralized AI training at frontier scale is no longer theoretical; it's happening. And that changes everything about who can build the future of AI.

The banners are raised, as Templar put it. The campaign has begun. And Big Tech's monopoly on frontier AI development just became a lot less certain.