This Week in AI: Chutes Chat Launches, Templar Breaks Training Records, and Tech Leaders Dine with Trump

September 1-5, 2025

The open source AI ecosystem had a busy week. Chutes dropped their chat app in beta, Templar smashed distributed training records, Switzerland launched a national AI model, and Trump hosted Big Tech CEOs for dinner while Elon Musk was notably absent. Here's what you need to know.

Chutes Chat Goes Live: 100+ Models in One Interface

Chutes AI (Subnet 64 on Bittensor) just launched Chutes Chat in beta, and it's exactly what the decentralized AI space needed. The platform gives users access to hundreds of LLMs, VLMs, image, and audio models working together in a single chat interface.

The standout feature is Chutes Auto, their answer to choice paralysis. Instead of forcing users to pick from dozens of models, Chutes Auto automatically selects the best open-source model for each task. Smart move when the list of options feels endless.

Current features include all live Chutes models, image generation and editing, web search, PDF uploads, memory storage, and voice input/output. Coming soon: voice mode, agent mode, deep research, and code mode.

The timing couldn't be better. On August 5th, Chutes processed around 104 billion tokens on OpenRouter, and even Nous Research's brand-new Hermes 4 70B is already running on their infrastructure with 99.7% uptime and 1.55s latency.

Try it at chat.chutes.ai while it's in beta.

Templar Shatters Distributed Training Records

While Big Tech burns billions on centralized training infrastructure, Templar (Subnet 3 on Bittensor) is quietly changing how we build foundation models through decentralized pretraining.

Their validator loss just dipped below 2.0 on a 79-billion-parameter model. That's fucking impressive for training distributed across heterogeneous compute resources. Miner loss is improving too, sitting at 2.77 and dropping on a 70B-parameter model.

Distributed State isn't being modest about the achievement: "We have smashed the Pareto frontier on model size. Nous Research abandoned their 40B run, and PrimeIntellect's largest pre-train run is 10B. With grail, we plan to repeat this feat with post-training."

The implications are massive. If decentralized networks can achieve state-of-the-art training results at this scale, the entire premise of needing billion-dollar training budgets starts to crumble.

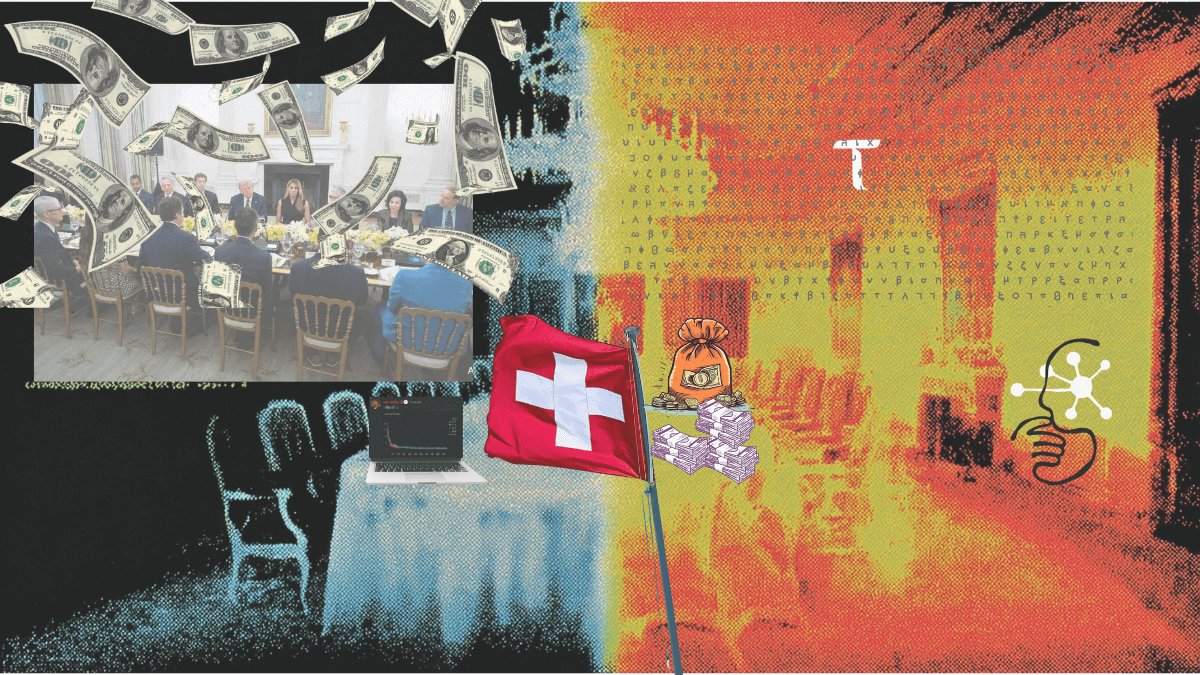

Trump's Tech Dinner: The New Power Structure

Thursday night's White House dinner revealed the evolving power dynamics in AI. Trump hosted Mark Zuckerberg, Tim Cook, Bill Gates, Sam Altman, Sundar Pichai, and other tech luminaries in the State Dining Room.

The most telling detail? Elon Musk didn't show up, citing "scheduling conflicts" amid his ongoing feud with Trump. Zuckerberg seems to have claimed the spot as Trump's primary tech advisor, a shift that could reshape AI policy direction.

The dinner followed Melania Trump's AI education task force announcement and focused on chip manufacturing investments and hands-off AI regulation. Translation: Big Tech wants minimal government interference while they consolidate control over AI development.

But here's what they're missing: while they're dining and dealmaking, decentralized networks are building better solutions faster and cheaper.

Switzerland Launches National AI Model

Switzerland just entered the AI race with Apertus, their open-source national LLM developed by public institutions including EPFL, ETH Zurich, and the Swiss National Supercomputing Centre.

Apertus was trained on 15 trillion tokens across 1,000+ languages, with 40% non-English data including Swiss German and Romansh. The key differentiator: complete transparency. They released the model, documentation, source code, training process, and datasets.

More importantly, Apertus was built to comply with Swiss data protection and copyright laws, making it attractive for European companies dealing with strict regulations. The Swiss Bankers Association sees "great long-term potential" for a homegrown model that respects their bank secrecy rules.

Available in 8B and 70B parameter versions via Swisscom and Hugging Face.

Google Drops EmbeddingGemma

Google DeepMind released EmbeddingGemma, a 308M-parameter embedding model designed for on-device AI. It runs in under 200MB of RAM with quantization and has a 2,048-token context window, so it's optimized for situations where internet connectivity isn't guaranteed.

On MTEB, the standard benchmark for evaluating text embeddings, it's the highest-ranking open model under 500M parameters, and it supports 100+ languages. It's based on Gemma 3 270M.

While not revolutionary, it's another piece of the puzzle for truly local AI deployments.

Deepfake Protection Gains Bipartisan Support

A Boston University survey found that 84% of Americans want protection against AI deepfakes, with support crossing party lines (84% Republicans, 90% Democrats). The backing extends to labeling AI-generated content, removing unauthorized deepfakes, and licensing rights for voice and likeness.

While lawmakers debate policy, BitMind continues building practical solutions, recently launching their mobile app for real-time deepfake detection across social media platforms.

TAO Trading Platforms Heat Up

The battle for TAO analytics supremacy got more interesting this week. Safello, the leading Nordic crypto exchange, launched Wu-TAO to compete with Taostats and TAO.app.

Wu-TAO introduces live community chat and a subnet rating system covering token liquidity, price health, technical maturity, and on-chain performance. Most categories are still missing data, but it's a start.

The platform includes hip-hop tracks from "nAvi the NORTH," because apparently we're adding vibes to crypto analytics now. The real test will be whether they can compete long-term, especially with TAO.com's big platform launch approaching.

Anthropic Raises $13 Billion While Getting Outpaced by a 21-Year-Old

On the absurd-valuations front, Anthropic just closed a $13 billion funding round at a $183 billion post-money valuation. That's nearly triple its March valuation, and its run-rate revenue has climbed to $5 billion from $1 billion at the start of the year.

Here's the mindfuck: while investors are throwing billions at Anthropic for a 75% SWE-Bench score that cost hundreds of millions to achieve, a 21-year-old founder just hit 80% on the same benchmark for $650,000.

Anthropic's finance chief Krishna Rao called the funding "extraordinary confidence in our financial performance." What he didn't mention is that their entire business model just got undermined by decentralized networks achieving comparable results for 1/200th the cost.

The contrast couldn't be sharper. As Anthropic celebrates its $183 billion valuation, Ridges AI (Subnet 62 on Bittensor) is demonstrating that Anthropic's entire value proposition is built on a false premise: that you need massive centralized resources to build competitive AI systems.

VCs are literally funding their own obsolescence.

DHH Drops Truth Bombs at Rails World

Ruby on Rails creator David Heinemeier Hansson delivered a keynote at Rails World in Amsterdam that perfectly captures the open source ethos driving this revolution, and he distilled the message in a tweet: "You should have the power to change anything and everything about your computer, your framework, and your server. End-to-end open source is your ticket to tell Apple — or anyone! — to go fuck themselves when they seek to dictate how you compute."

The full tweet encapsulates exactly why decentralized AI networks matter. When you control the entire stack from hardware to software, no corporation can gate your access or extract rent from your innovation.

Kimi K2 Gets Supercharged

Moonshot AI dropped Kimi K2-0905, a significant upgrade to their already impressive model. The update brings enhanced coding capabilities (especially front-end work and tool calling), a context window extended to 256K tokens, and better integration with agent scaffolds like Claude Code and Roo Code.

For developers who need reliable tool calling at speed, Moonshot's turbo API, which promises 100% tool-call accuracy at 60-100 tokens per second (TPS), is worth checking out. The model is available on Hugging Face and their chat platform.

Google's Nano Banana Drops

Google announced Nano Banana (aka Gemini 2.5 Flash Image), which they're calling "the world's most powerful image editing and generation model." It's available for "free" in the Gemini app, though Google's track record with bold claims about their AI capabilities suggests taking this with a grain of salt.

What This Week Means

The pattern is becoming clear: while centralized AI companies focus on consolidating power through political influence and billion-dollar funding rounds, decentralized networks are building superior technology with transparent, open development.

Chutes handled around 104 billion tokens on OpenRouter in a single day. Templar is achieving state-of-the-art training results on 79B parameter models. Switzerland is building national AI infrastructure with complete transparency. Meanwhile, Big Tech CEOs are having dinner parties and complaining about regulation.

The divergence accelerates every week. Decentralized AI isn't just competitive, it's becoming demonstrably superior on performance, cost, and innovation speed.