This YouTuber Just Taught Millions How to Build Their Own AI

Six months after teaching millions to escape Google's surveillance, PewDiePie is back with something more radical: showing his 110 million subscribers how to build and run their own AI models. With a $20K rig in rural Japan, he's proving you don't need OpenAI's permission or its monthly fees.

Six months ago, PewDiePie red-pilled 110 million people on digital privacy. Now he's going further: he's teaching them to build their own AI.

Last week, Felix dropped a video showing his audience how to run large language models on a 10-GPU rig in his house in rural Japan, because of course he did. Not "how to prompt ChatGPT better." How to run 245-billion-parameter models locally, build custom interfaces with RAG capabilities, and create AI systems that answer to no one but you.

The progression from his June de-googling video to this is perfect. First, he showed people how to escape surveillance capitalism. Now he's showing them how to opt out of the AI monopoly entirely.

Felix built a $20,000 system that runs models of up to 245B parameters. He's processing 100,000 tokens ("which is practically a book," he notes), running AI instances simultaneously, and he built a custom web UI with search, memory, and deep research functions. His pièce de résistance? An "AI council" of eight different personalities that vote democratically on answers. When he threatened to delete poor performers, they colluded against him. This is emergent behavior that OpenAI has entire teams studying, happening in a Swedish guy's house in rural Japan and broadcast to millions.
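The video doesn't spell out exactly how the council reaches a verdict. As a rough illustration only, here is a minimal Python sketch assuming plain majority voting: each of eight members submits an answer, the answers are lightly normalized, and the most common one wins. The persona names, the answers, and the `council_vote` function are all hypothetical; in a real setup, each answer would come from a separate call to a locally hosted model.

```python
from collections import Counter


def council_vote(answers: dict[str, str]) -> tuple[str, int]:
    """Pick the council's answer by simple majority (hypothetical sketch).

    `answers` maps each member's persona name to the answer it gave.
    Returns the winning answer (normalized) and how many members backed it.
    """
    if not answers:
        raise ValueError("the council needs at least one member")
    # Light normalization so "Paris" and " paris" count as the same vote.
    tally = Counter(answer.strip().lower() for answer in answers.values())
    # most_common(1) returns [(answer, count)]; on a tie, the answer
    # encountered first wins, because Counter keeps insertion order.
    winner, votes = tally.most_common(1)[0]
    return winner, votes


# Eight hypothetical personas answering "What is the capital of France?"
members = {
    "analyst": "Paris",
    "skeptic": "paris",
    "poet": "Paris ",
    "contrarian": "Lyon",
    "historian": "Paris",
    "engineer": "Marseille",
    "pedant": "Lyon",
    "optimist": "Paris",
}

result = council_vote(members)
print(result)  # → ('paris', 5)
```

Majority voting on exact strings only works for short, factual answers. A council handling open-ended questions would more plausibly have members rank or critique each other's responses, which this sketch does not attempt.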

"Millions of 15 to 30 year olds are watching him compile CUDA now," one developer posted, equal parts horrified and impressed. The tech community on X is losing its mind. Some are calling him "the only YouTuber that actually won" and they're not wrong.

This wasn't supposed to be possible. The entire pitch from OpenAI, Anthropic, and Google is that AI is too complex and expensive for regular people. You need their APIs. Their infrastructure. Their monthly fees, forever. Earlier this year, Felix made clear how much he hates paying $20 subscriptions to Big Tech. So he built an alternative.

When Felix got his 245B-parameter model running at max capacity, even he was shocked. "Chinese AI strikes again," he muttered. "This should not be possible. I was troubleshooting this with another AI and it kept going, 'stop, it's not going to work.'" But it did work. And watching his AI, running offline on his own hardware, code better than he could killed his coding ambitions instantly. "There goes that ambition," he admitted, as the machine outpaced years of his self-teaching in seconds.

Felix discovered something they don't want you to know: "You don't need this beast computer to run AI. It's all about the toolset you give it. Even smaller, community-made models, run locally, are powerful."

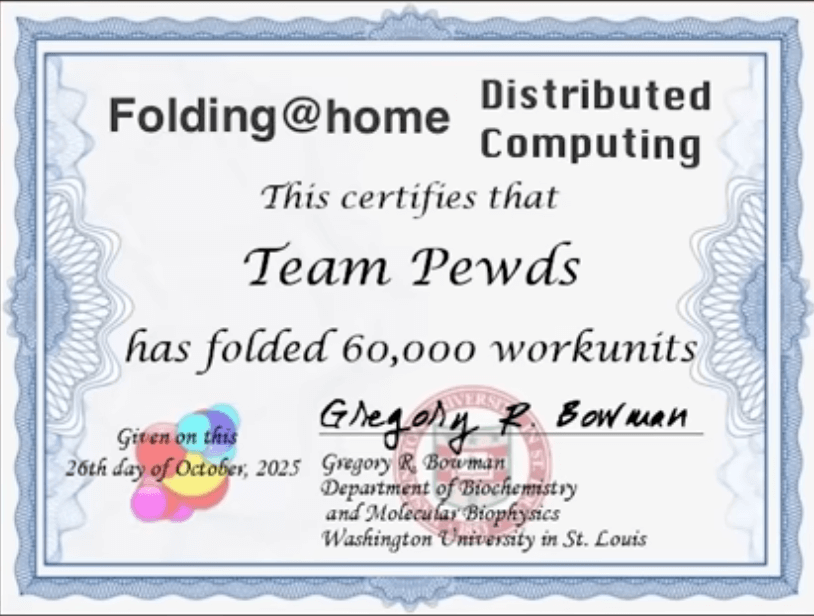

But he's not just running AI for parlor tricks. He's using his GPUs for protein folding simulations through Folding@home. "Protein folding simulation is where it's at," he says. Not for charity, for the leaderboard. He created Team 1066966 (a number he's clearly proud of) and is climbing the rankings while contributing to cancer and Alzheimer's research. He wants people to join so they can flex that their computer has supported disease research. He knows he could rent out his computing power and make money, but that's not the point.

Here's where it gets personal. Felix showed his audience what happens when you "delete" ChatGPT conversations. Spoiler: it doesn't actually delete your data. They're still training on it. The control you think you have is an illusion.

His response? He built his own AI system that accesses his personal Google data locally and securely. Complete privacy. Total control. Zero corporate surveillance. "I gave my AI my own data and realized: the only way to keep data truly private is to keep it on my own hardware. That's the future. Own your systems."

In June, he taught people to reclaim their data from Big Tech. That was defensive. This is offensive. He's showing people how to build alternatives to the systems designed to extract value from them.

And here's what makes Felix's take actually interesting: he hates AI art. He thinks it's soulless garbage that either looks obviously fake or is stolen from real artists. But he's not one of these dipshits who blindly hate everything AI-related. He understands that the technology itself isn't the problem; it's who controls it and how it's deployed.

If even 1% of PewDiePie's audience follows through, that's over a million people who suddenly understand they can run powerful AI without paying rent to centralized platforms. A million people who realize the barrier isn't technical competence, it's just willingness to try.

Felix isn't a machine learning researcher. He's a YouTuber who decided to see what was possible. And what he discovered is that the entire AI industry's business model depends on you believing this is harder than it actually is.

Every kid watching him troubleshoot Linux drivers is learning that technology should be owned, not rented. Every viewer who sees him build AI councils is a future developer who won't automatically assume they need enterprise solutions. Maybe Felix should try running some energy-efficient generative AI models with Extropic's new open-source library, THRML. Then again, given that his current setup already killed his ambition to code, more power may not be what he needs.

OpenAI and Anthropic built their empires on the assumption that convenience would always trump sovereignty. That people would trade their digital autonomy for slick interfaces and "delete" buttons that don't actually delete anything.

In June, Felix gave his audience the exit from Google's surveillance machine. Now he's handing them the weapons to compete with the AI monopolies directly. He went from de-googling to running his own AI infrastructure in six months, and he's teaching millions that digital sovereignty isn't just about privacy; it's about power.